Ajax Upload a User-Selected File to AWS S3

Securing File Upload & Download Using AWS S3 Bucket Presigned URLs and Python Flask

AWS S3 Buckets are a great way to store data for web applications, particularly if the files are large. But how can we let public web application users upload files to private S3 buckets, or download files from them? How can we make sure our bucket is safe and that no one sees files they are not authorized to see? In the following sections, we will learn secure ways to upload and download data from private S3 Buckets while building a simple Python Flask web application.

Check out the example project on GitHub.

Contents:

1. What is AWS S3 Bucket?

2. Who can access the objects in AWS S3 Bucket?

3. What is "Presigned URL"?

4. Creating AWS S3 Bucket

5. Creating AWS IAM user and limiting the permissions

6. Creating Python module for Bucket processes

7. Creating Flask application

8. Creating HTML page and AJAX endpoints

9. How can we make accessing Bucket objects more secure?

What is AWS S3 Bucket?

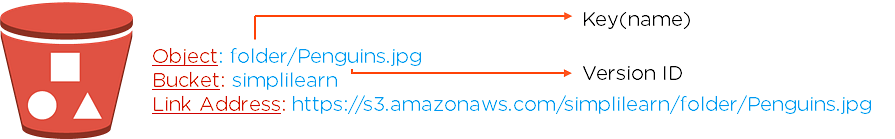

AWS S3 (Amazon Simple Storage Service) is an online object storage service that offers scalability, data availability, security, and performance. Buckets, similar to file folders, store objects (data files of any type) together with their descriptive metadata.

A bucket stores objects, and each object is identified by its "Key", which is essentially its filename (it can include a folder-like prefix such as `photos/cat.png`). If a bucket is public and someone wants to reach an object in it, the URL `https://s3.amazonaws.com/<bucket>/<key>` can be used.
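As a small illustration, the helpers below build that path-style URL and the virtual-hosted style (`https://<bucket>.s3.<region>.amazonaws.com/<key>`), which AWS documents as the preferred form. This is a sketch. The function names are hypothetical, and the URLs only return data if the object really is public. Keys are percent-encoded so that spaces and similar characters survive.

```python
from urllib.parse import quote


def path_style_url(bucket: str, key: str) -> str:
    """Path-style URL for an object in a *public* bucket (hypothetical helper)."""
    # quote() keeps "/" so folder-like prefixes such as "photos/" stay readable
    return f"https://s3.amazonaws.com/{bucket}/{quote(key)}"


def virtual_hosted_url(bucket: str, region: str, key: str) -> str:
    """Virtual-hosted-style URL: the bucket name moves into the hostname."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


print(path_style_url("my-bucket", "photos/cat 1.png"))
# https://s3.amazonaws.com/my-bucket/photos/cat%201.png
```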

Who can access the objects in AWS S3 Bucket?

It depends on whom you allow access. Buckets can be created with private or public access. If a bucket is public, anyone can access the bucket and the objects in it. If it is private, only authorized users can access the data, and those users are managed through AWS IAM (Identity and Access Management). IAM also defines what each user is allowed to do, through permission documents called "Policies".

What is "Presigned URL"?

Presigned URLs let people who have no access to the bucket upload, download, or delete files. A presigned URL grants temporary access to one specific S3 object. Only someone with valid AWS credentials can create it, and the URL works only if those credentials are themselves allowed to perform the operation it grants.

Using presigned URLs provides many important advantages:

- The bucket doesn't need to be public, so private data (like users' private files in a web application) can be stored securely in the same bucket.

- A user who doesn't have access to the bucket can still upload or download data through presigned URLs.

- Presigned URLs are created on the server side, so users never see sensitive data like API keys and cannot create URLs by themselves.

- Presigned URLs cannot be modified and are time-limited. A user who holds the presigned URL of one object cannot reach another object by editing the URL, because the signature would no longer match. The user also cannot use the URL forever.
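To see why editing a presigned URL does not work, it helps to look at what the signature covers. The sketch below hand-builds an AWS Signature Version 4 query-string presigned GET URL using only the standard library. It is for illustration only: in practice boto3's `generate_presigned_url()` does this for you. The function name and the dummy credentials are hypothetical. The bucket (in the host), key, expiry, and timestamp all go into the signed canonical request, so changing any of them produces a URL whose signature S3 rejects.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign_get(bucket, key, region, access_key, secret_key, expires=900, now=None):
    """Illustrative SigV4 presigned GET URL (virtual-hosted style, no session token)."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = f"{bucket}.s3.{region}.amazonaws.com"
    canonical_uri = "/" + quote(key, safe="/")
    scope = f"{date_stamp}/{region}/s3/aws4_request"

    # Every one of these query parameters is covered by the signature.
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    canonical_query = "&".join(
        f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in sorted(params.items())
    )

    # Method, path, query, signed headers and payload hash are signed together.
    canonical_request = "\n".join(
        ["GET", canonical_uri, canonical_query, f"host:{host}\n", "host", "UNSIGNED-PAYLOAD"]
    )
    string_to_sign = "\n".join(
        [
            "AWS4-HMAC-SHA256",
            amz_date,
            scope,
            hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
        ]
    )

    # Derive the signing key from the secret; the secret itself never appears in the URL.
    signing_key = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        signing_key = _hmac(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"


when = datetime.datetime(2024, 1, 1, tzinfo=datetime.timezone.utc)
url_a = presign_get("my-bucket", "a.png", "eu-west-1", "AKIDEXAMPLE", "dummy-secret", 300, now=when)
url_b = presign_get("my-bucket", "b.png", "eu-west-1", "AKIDEXAMPLE", "dummy-secret", 300, now=when)
# Same credentials and time, different key: the signatures differ,
# so rewriting "a.png" to "b.png" in url_a cannot produce a valid URL.
print(url_a.split("X-Amz-Signature=")[1] != url_b.split("X-Amz-Signature=")[1])  # True
```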

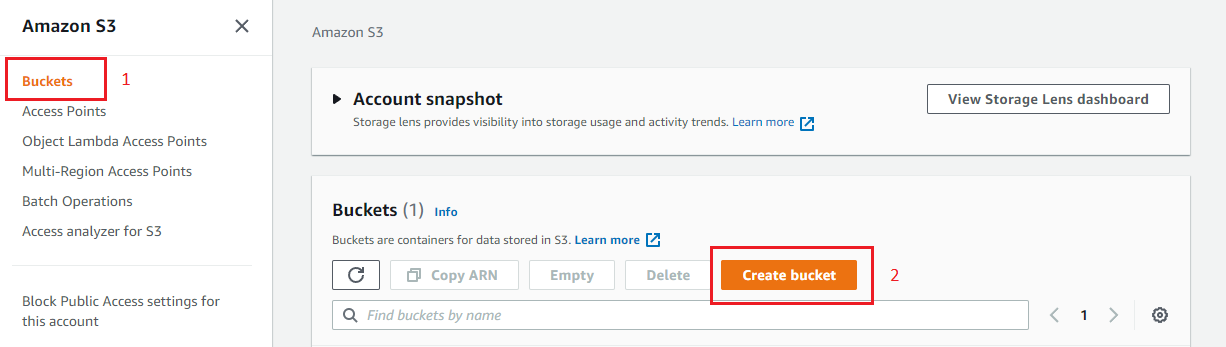

Creating AWS S3 Bucket

First, let's create an AWS S3 Bucket. Navigate to the AWS S3 console and click the "Create bucket" button.

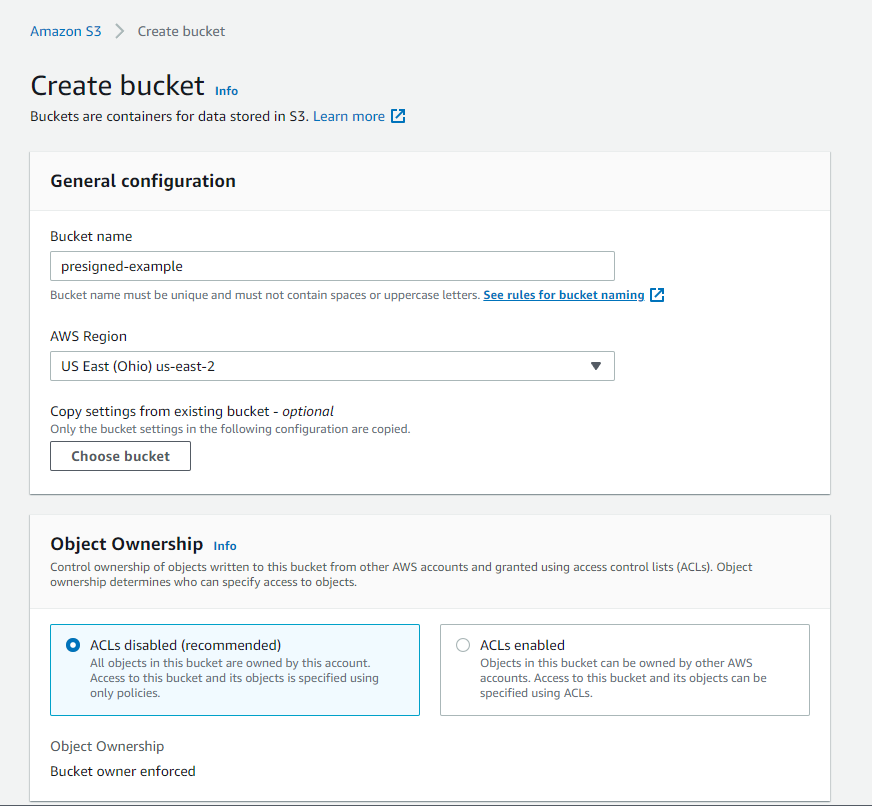

- Select bucket name, it must be unique (check out the naming rules)

- Select AWS Region or leave it as default.

- Select ACLs disabled for Object Ownership.

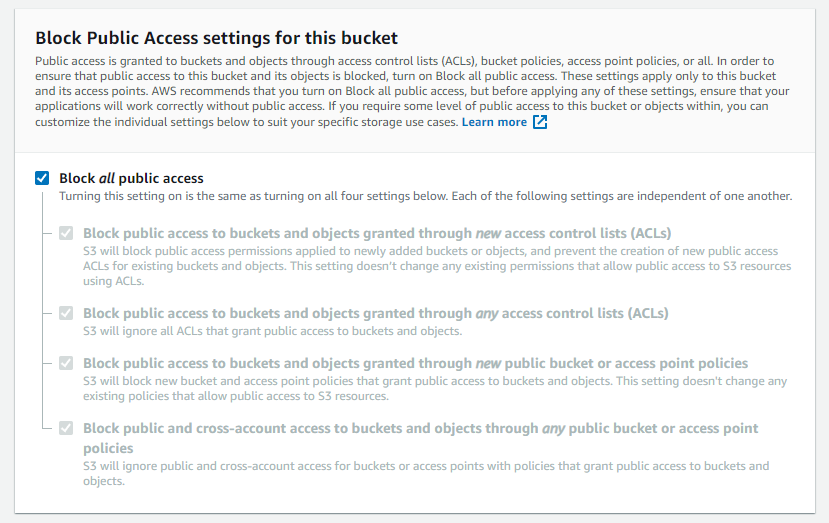

iv. Make certain "Block all public access" is checked. This makes S3 Bucket private and simply the users nosotros grant access can accomplish the bucket. We will create access granted users afterward on AWS IAM.

five. Leave the other sections as default.

six. Click the "Create Bucket" button.
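The same settings can also be applied from code. The sketch below is an assumed equivalent of the console steps, not part of the original project. It takes an already configured boto3 S3 client, for example `boto3.client("s3")` running with an administrator's credentials rather than the limited IAM user created next. It creates the bucket with ACLs disabled (`ObjectOwnership="BucketOwnerEnforced"`) and then turns on all four "Block public access" settings. `us-east-1` is the one Region where `CreateBucketConfiguration` must be omitted.

```python
def create_private_bucket(s3_client, name: str, region: str) -> None:
    """Create a bucket with ACLs disabled and all public access blocked.

    `s3_client` is assumed to be a boto3 S3 client allowed to create buckets.
    """
    kwargs = {"Bucket": name, "ObjectOwnership": "BucketOwnerEnforced"}  # "ACLs disabled"
    if region != "us-east-1":
        # Every Region except us-east-1 needs an explicit location constraint.
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**kwargs)

    # Equivalent of ticking "Block all public access" in the console.
    s3_client.put_public_access_block(
        Bucket=name,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )
```

Usage would look like `create_private_bucket(boto3.client("s3", region_name="eu-west-1"), "my-unique-bucket-name", "eu-west-1")`, where the bucket name is a placeholder.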

Creating AWS IAM user and limiting the permissions

The second thing we need to do is create an IAM user with limited access to the S3 Bucket we've just created. After creating the user, we will get access credentials (Access Key ID and Secret Access Key), and we will use them in our web application.

Let's create a policy to define permissions for the user we will create later.

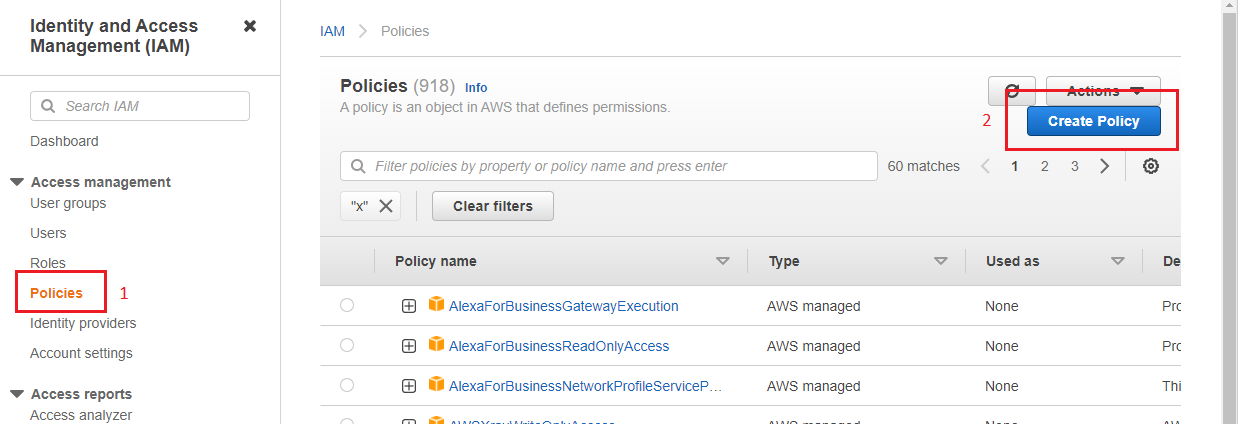

i. Get to AWS IAM Homepage, select Policies, and click the "Create Policy" button.

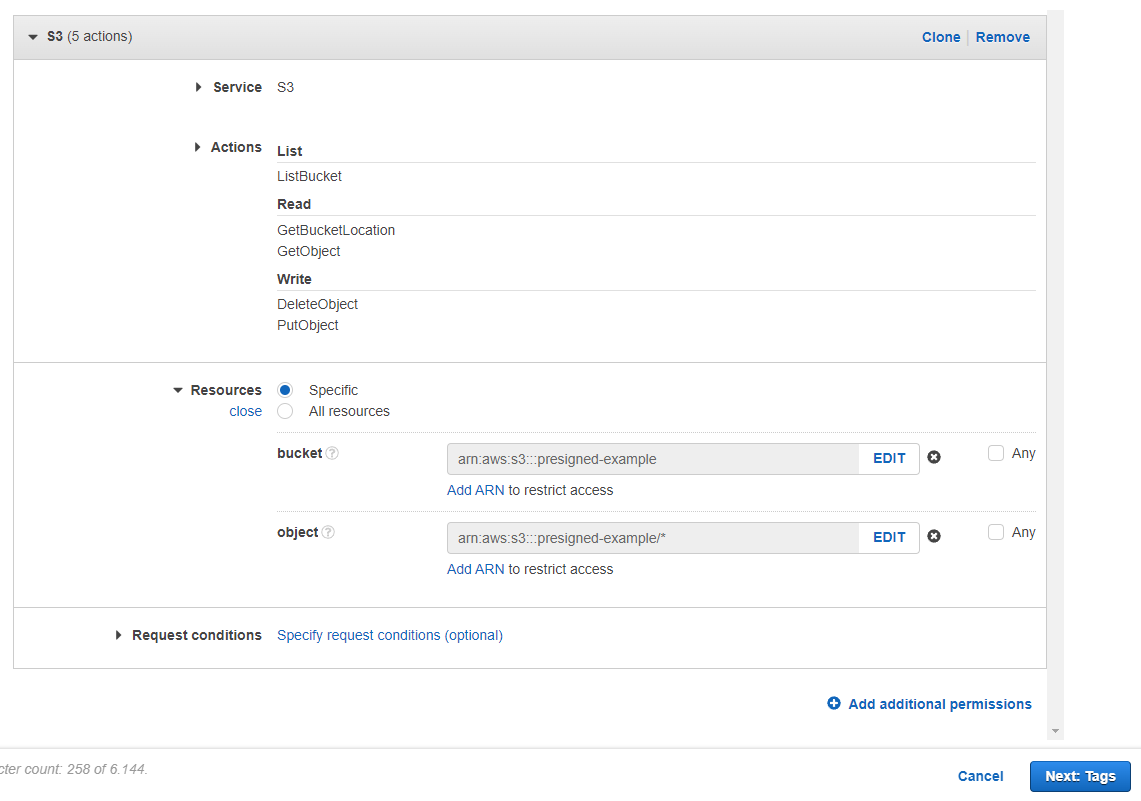

ii. Select "S3" in the "Service" department,

3. Select the following permissions in the "Action" section. If you don't demand to have some of the permissions, you don't have to select them.

- ListBucket : For list files in Bucket

- GetBucketLocation : For list files in Saucepan

- GetObject : Download object permission

- DeleteObject : Delete object permission

- PutObject : Upload object permission

4. In the "Resources" section, we should cull which S3 Bucket we grant permission to the user to use. We tin choose all buckets, but we volition limit the user to utilize just the bucket we've created before. So, write saucepan proper noun and cull "Whatever" for objects.

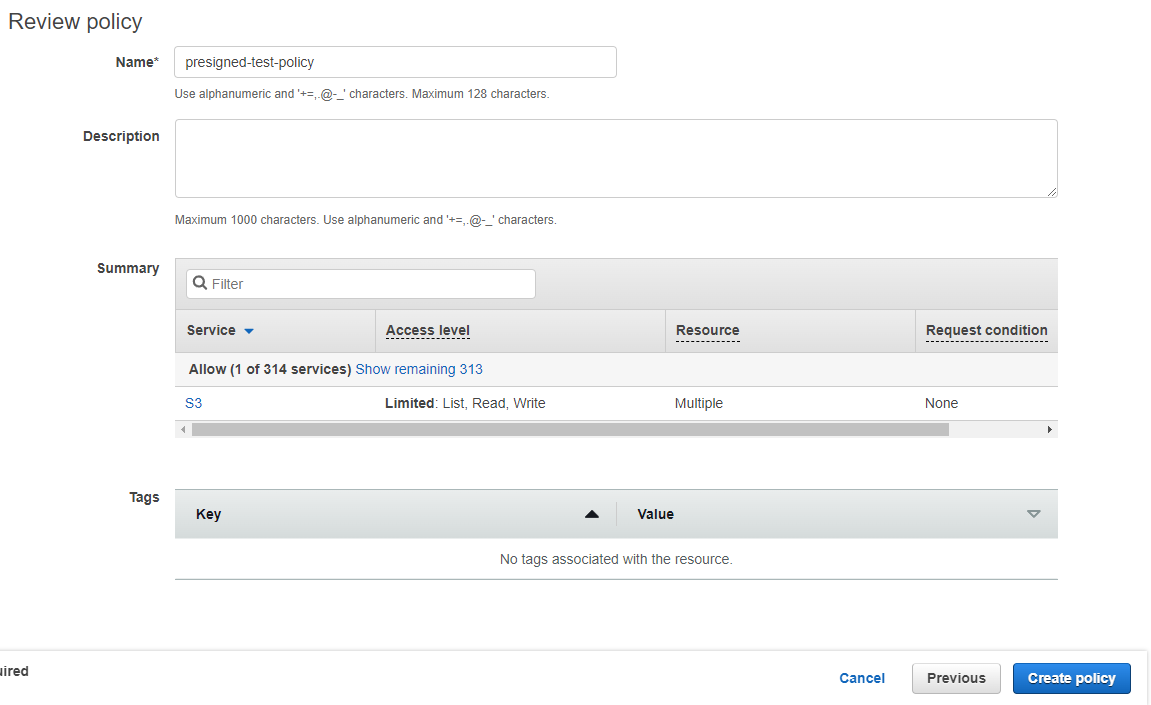

5. Leave the other section as default and next to the other steps. Lastly, name the policy and click the "Create policy" push.
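The visual editor produces a JSON policy document. The sketch below builds an equivalent document in Python. It assumes a placeholder bucket called `my-bucket` and the five actions listed above. Bucket-level actions (`ListBucket`, `GetBucketLocation`) apply to the bucket ARN. Object-level actions apply to `arn:aws:s3:::<bucket>/*`, which is what choosing "Any" for objects produces. The printed JSON could be pasted into the policy editor's JSON tab.

```python
import json


def bucket_user_policy(bucket: str) -> dict:
    """IAM policy limiting a user to one bucket (hypothetical helper)."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level permissions: listing and Region lookup.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": bucket_arn,
            },
            {
                # Object-level permissions: "Any" object, but only in this bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


print(json.dumps(bucket_user_policy("my-bucket"), indent=2))
```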

After creating the user policy, we can create a user who will manage the bucket operations.

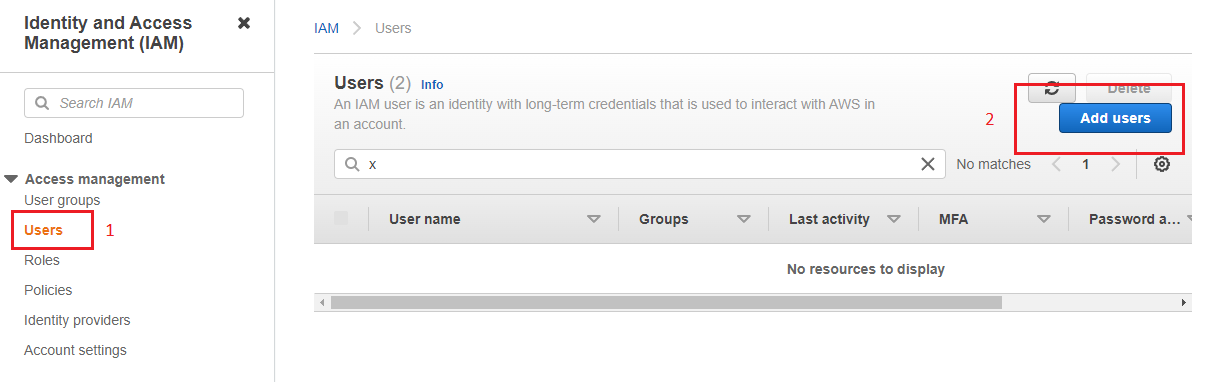

i. Go to AWS IAM Homepage, select "Users" and click the "Add users" button.

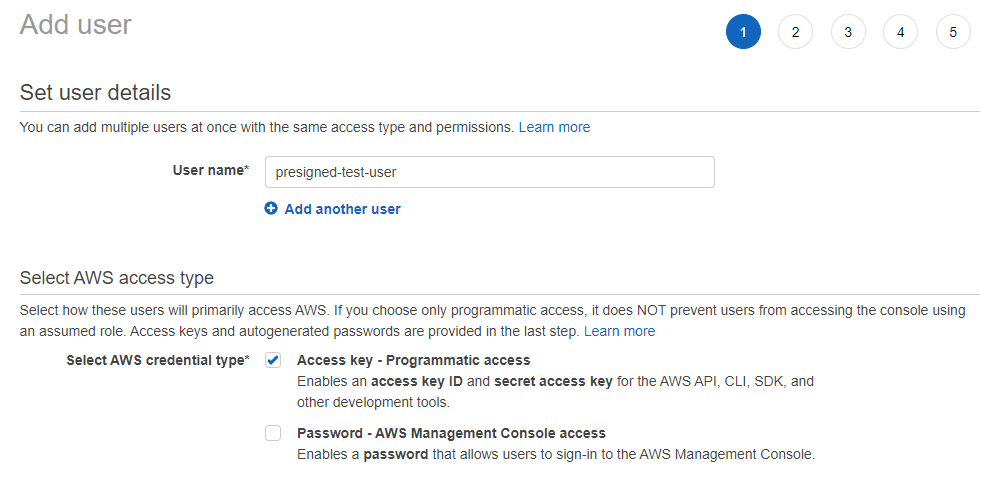

2. Name the user and select "Access cardinal - Programmatic access" and click the "Next" button.

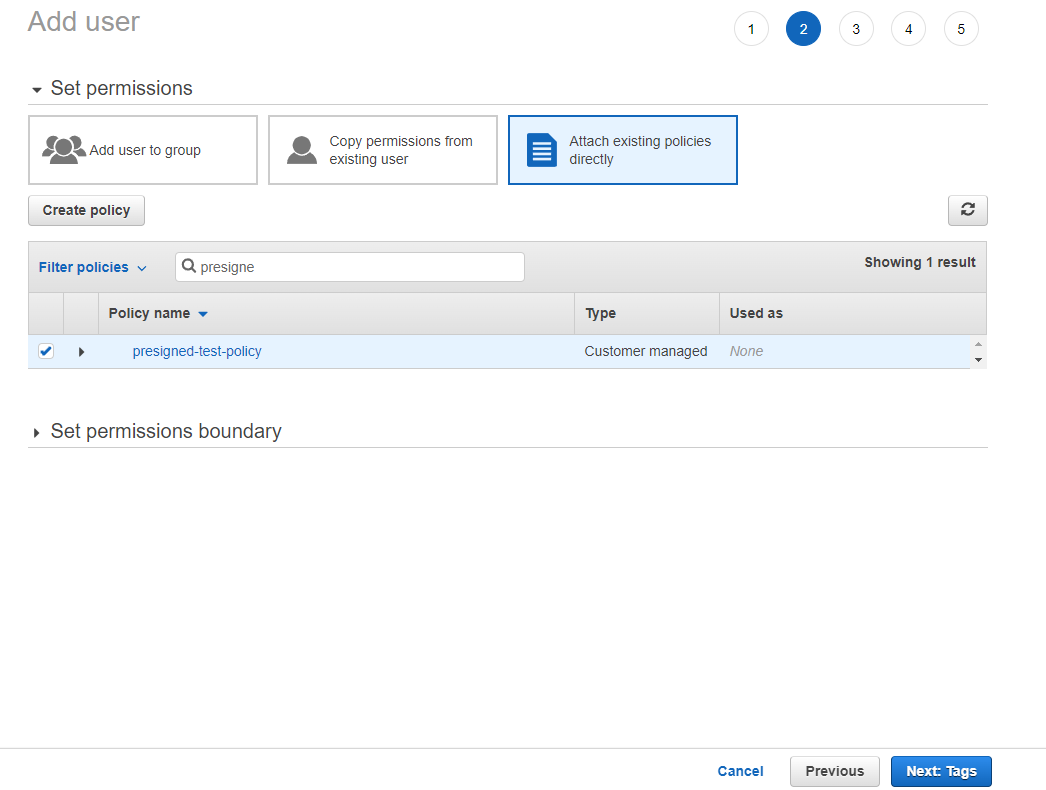

3. For permissions, select "Adhere existing policies directly", notice and select the policy nosotros've created before, then click the "Side by side" button.

4. Leave the other sections as default and create the user. Afterward that, AWS shows "Admission key ID" and "Hole-and-corner access key". The AWS admission credentials must be noted for afterward use.
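For repeatable setups, the policy and user steps can be scripted. This sketch assumes an IAM client, for example `boto3.client("iam")`, running with administrator credentials, plus a policy document like the one described above. The user and policy names are placeholders. The function returns the new access key pair, which should be stored securely, because, as in the console, the secret cannot be retrieved again.

```python
import json


def create_bucket_user(iam_client, user_name: str, policy_name: str, policy_document: dict):
    """Create a policy and a user, attach the policy, and return (access_key_id, secret)."""
    policy = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),  # the API expects a JSON string
    )
    policy_arn = policy["Policy"]["Arn"]

    iam_client.create_user(UserName=user_name)
    iam_client.attach_user_policy(UserName=user_name, PolicyArn=policy_arn)

    # Programmatic access: the secret is only ever returned by this call.
    access_key = iam_client.create_access_key(UserName=user_name)["AccessKey"]
    return access_key["AccessKeyId"], access_key["SecretAccessKey"]
```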

Creating Python module for Bucket processes

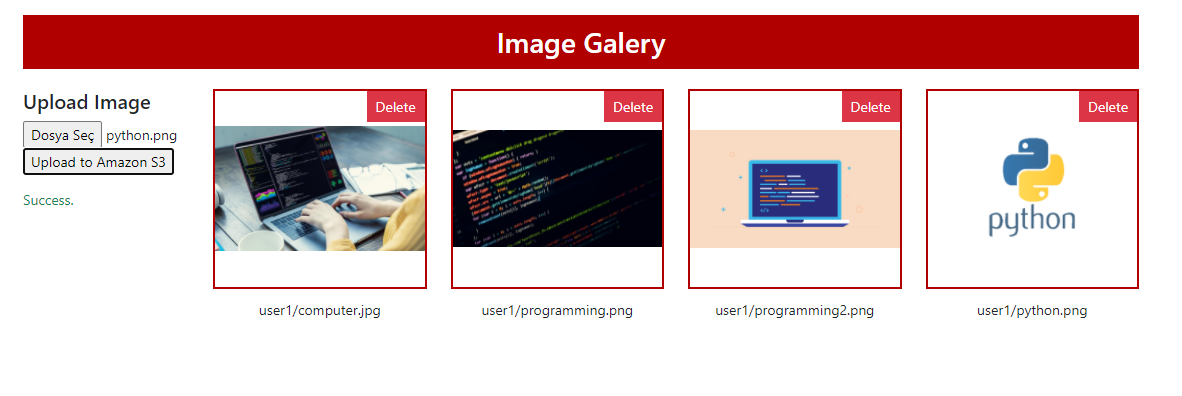

After creating the S3 Bucket and the IAM user, we can start programming our web application. The application will use the Python Flask library as the backend for serving data and jQuery for Ajax requests.

First, we will create a `lib.py` file and add a class that is responsible for downloading and uploading files to our AWS bucket. We will use Boto3, the official AWS SDK for Python (Boto3 Documentation).

The `lib.py` code has a class named `AwsBucketApi`, which starts with two methods. The `get_settings` method reads the `settings.json` file to get the AWS credentials, the bucket name, and the bucket region, so the JSON file holds those values.

The `__init__` method creates the `self.bucket` object, which specifies the AWS service and credentials we will use. The following methods use that object to connect to the S3 Bucket.

Presigned URL for getting a file

The download method takes a filename (filenames are called "Keys" on AWS) and returns a presigned URL string, which we can show to the user we want to give access to the file. Normally all objects are private (we set that up when creating the bucket). This method instead creates a temporary public URL. The URL is time-limited (see the expiry argument), and anyone who has it can access the data until it expires. In the `.generate_presigned_url()` call, the bucket name and filename are set up front and covered by the signature. So someone who edits the generated URL cannot reach any other bucket or object.

Presigned URL for uploading a file

Like the presigned GET URL, the upload method creates a URL for public uploads, along with some additional fields. We will create a form that uses the `url` value as its action URL and the `fields` values as hidden inputs. As before, this lets users upload files to the bucket even though they normally don't have any permission to do so.

List and Delete Functions

These methods are not related to the presigned URL concept, but they are useful to have. In this application, presigned URLs are used for just two actions: uploading a file and getting/downloading a file. All other operations (like listing or deleting files) must be done on the backend, and the developer must check user permissions. For example, if a user sends the `delete_file` method a filename that belongs to someone else, the method will delete that file. User permissions must therefore be checked before the call.
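These operations run with the backend's own credentials, so the permission check has to live in application code. The sketch below assumes a hypothetical convention where every user's files sit under a `users/<user_id>/` prefix. Listing is restricted to that prefix, and deletion refuses keys outside it. `list_objects_v2` returns at most 1,000 keys per call, so a larger bucket would need a paginator.

```python
def user_prefix(user_id: str) -> str:
    # Trailing slash so user "4" cannot match the keys of user "42".
    return f"users/{user_id}/"


def list_user_files(client, bucket: str, user_id: str) -> list:
    """Keys owned by one user (first page of results only)."""
    response = client.list_objects_v2(Bucket=bucket, Prefix=user_prefix(user_id))
    # "Contents" is absent when nothing matches the prefix.
    return [obj["Key"] for obj in response.get("Contents", [])]


def delete_user_file(client, bucket: str, user_id: str, key: str) -> None:
    """Delete a key only if it belongs to the requesting user."""
    if not key.startswith(user_prefix(user_id)):
        raise PermissionError(f"user {user_id} may not delete {key!r}")
    client.delete_object(Bucket=bucket, Key=key)
```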

Creating Flask application

The Flask application has three endpoints:

- `/`: Homepage. It returns the `index.html` file, which contains the file upload form and the user's file list. (In this example, we will only use image files.)

- `/get_images`: Ajax endpoint to get the user's files. It returns a list of dict objects, each including a `filename` and a presigned GET `url`.

- `/delete_images`: Ajax endpoint to delete a file. It receives a filename and deletes that file.
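A minimal sketch of those three endpoints follows. It assumes a `bucket_api` object with `generate_presigned_post_fields()` (the method named in the HTML section) and `delete_file(key)`, plus hypothetical `list_files()` and `get_presigned_url(key)` methods. Accepting POST on `/delete_images` is also an assumption. To stay self-contained, it renders a tiny inline template instead of `index.html`, and it leaves the per-user permission check as a comment.

```python
from flask import Flask, jsonify, render_template_string, request

# Stand-in for index.html: hidden inputs come from the presigned POST fields,
# and the file input stays last because S3 ignores fields after the file.
PAGE = """<!doctype html>
<form id="upload-form" action="{{ post.url }}" method="post" enctype="multipart/form-data">
  {% for name, value in post.fields.items() %}
  <input type="hidden" name="{{ name }}" value="{{ value }}">
  {% endfor %}
  <input type="file" name="file">
  <button type="submit">Upload</button>
</form>
<table id="images"></table>
"""


def create_app(bucket_api):
    app = Flask(__name__)

    @app.route("/")
    def homepage():
        post = bucket_api.generate_presigned_post_fields()  # {"url": ..., "fields": {...}}
        return render_template_string(PAGE, post=post)

    @app.route("/get_images")
    def get_images():
        images = [
            {"filename": key, "url": bucket_api.get_presigned_url(key)}
            for key in bucket_api.list_files()
        ]
        return jsonify(images)

    @app.route("/delete_images", methods=["POST"])
    def delete_images():
        filename = request.form.get("filename")
        if not filename:
            return jsonify({"error": "filename is required"}), 400
        # A real app must check here that the current user owns `filename`.
        bucket_api.delete_file(filename)
        return jsonify({"deleted": filename})

    return app
```

With the `AwsBucketApi` class from `lib.py`, this would run as `create_app(AwsBucketApi()).run()`, provided that class exposes the assumed methods.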

Creating HTML page and AJAX endpoints

The last step is creating the `index.html` file and calling the endpoints above from that page with Ajax. The full page has these sections:

- section-1: This section has a form for uploading files. The `homepage()` method in `main.py` gets presigned POST fields by calling the `generate_presigned_post_fields()` method in `lib.py`. That method returns a dictionary containing the presigned POST `url` (str) and the signature `fields` (dict). To create the upload form, we include those `fields` as hidden inputs (keys are input names and values are input values) and use `url` as the action URL.

- section-2: A basic image list section, which is filled by the Ajax call in section-3.

- section-3: This Ajax method sends a request to the `/get_images` endpoint and gets a list of dicts, each with a presigned GET `url` (str) and a `filename` (str). If the request succeeds, it places the data in the table in section-2.

- section-4: This Ajax method serializes the form data from section-1 and sends it to the presigned POST URL, which was created earlier and placed in the form's `action` attribute.

- section-5: This Ajax method is responsible for deleting files.
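The form in section-1 follows two S3 rules for browser POST uploads: the file input must be named `file`, and it must be the last field, because S3 ignores fields that come after it. A small sketch of generating that form server-side with the standard library follows. The function name and the form `id` are hypothetical, and the input dict has the `{"url": ..., "fields": {...}}` shape described above.

```python
from html import escape


def upload_form_html(post: dict) -> str:
    """Build the upload form from presigned POST data (hypothetical helper)."""
    hidden = "\n".join(
        f'  <input type="hidden" name="{escape(name)}" value="{escape(value)}">'
        for name, value in post["fields"].items()
    )
    return (
        f'<form id="upload-form" action="{escape(post["url"])}" method="post" '
        'enctype="multipart/form-data">\n'
        f"{hidden}\n"
        # The file field must come after every signed field.
        '  <input type="file" name="file">\n'
        '  <button type="submit">Upload</button>\n'
        "</form>"
    )
```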

How can we make accessing Bucket objects more secure?

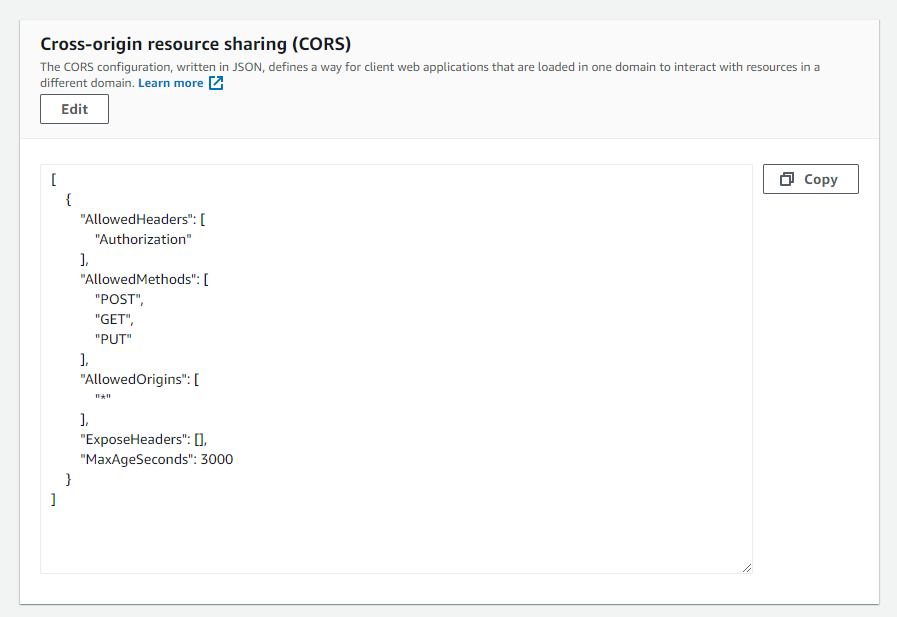

Cross-origin resource sharing (CORS)

AWS S3 supports CORS (Cross-origin resource sharing) configuration. CORS defines a way for client web applications loaded in one domain to interact with resources in a different domain. With S3 CORS configuration, we can:

- specify the origins that are allowed to access our bucket

- specify the HTTP methods to allow

- limit the request headers that POST or GET requests may send

- define a max-age, in seconds, for which browsers can cache the preflight response.

The detailed documentation is hither and here.

To add a CORS configuration to an S3 bucket:

- In the Buckets list, choose the name of the bucket that you want to add the CORS configuration to.

- Choose Permissions.

- In the Cross-origin resource sharing (CORS) section, choose Edit.

- In the CORS configuration editor text box, type or copy and paste a new CORS configuration, or edit an existing one. The CORS configuration is a JSON document, so the text you type in the editor must be valid JSON.

- Choose Save changes.
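In this example, the browser needs to POST uploads to the bucket. It also needs GET access if the page fetches objects with Ajax rather than plain `<img>` tags. Below is a sketch of a matching configuration, assuming the app is served from `http://localhost:5000`, a placeholder origin. The printed JSON is the array format the console editor accepts. The same rules can be applied with boto3's `put_bucket_cors`, which wraps them in `{"CORSRules": [...]}`.

```python
import json


def cors_rules(origins, methods=("GET", "POST"), max_age_seconds=3000):
    """One CORS rule restricted to known origins and methods (values are assumptions)."""
    return [
        {
            "AllowedOrigins": list(origins),
            "AllowedMethods": list(methods),
            "AllowedHeaders": ["*"],  # narrow this if you know the exact request headers
            "ExposeHeaders": ["ETag"],
            "MaxAgeSeconds": max_age_seconds,  # how long browsers may cache the preflight
        }
    ]


def apply_cors(s3_client, bucket: str, rules: list) -> None:
    # boto3 expects the console's JSON array wrapped in {"CORSRules": [...]}.
    s3_client.put_bucket_cors(Bucket=bucket, CORSConfiguration={"CORSRules": rules})


print(json.dumps(cors_rules(["http://localhost:5000"]), indent=2))
```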

Bucket policy

A bucket policy is another way to restrict access to resources and actions and to define conditions. For more information, check out Policies and Permissions in Amazon S3.
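As an example of conditions, the sketch below builds a bucket policy with two deny statements. The first rejects any request not made over HTTPS (`aws:SecureTransport`). The second rejects signed requests whose signature is older than a chosen age (`s3:signatureAge`, in milliseconds). This caps how long presigned URLs stay usable, regardless of the expiry they were created with. The 10-minute limit and the bucket name are placeholders. Both statements only deny access and grant nothing, and applying the policy needs credentials with bucket-policy permissions, not the limited IAM user.

```python
import json


def hardening_policy(bucket: str, max_signature_age_ms: int = 600_000) -> dict:
    """Bucket policy that only adds restrictions (Deny statements)."""
    resources = [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                "Sid": "DenyOldSignatures",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                # Age of the request signature in milliseconds (600000 ms = 10 minutes).
                "Condition": {"NumericGreaterThan": {"s3:signatureAge": max_signature_age_ms}},
            },
        ],
    }


policy_json = json.dumps(hardening_policy("my-bucket"))
# Applied with, e.g.: boto3.client("s3").put_bucket_policy(Bucket="my-bucket", Policy=policy_json)
```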

Source: https://medium.com/@serhattsnmz/securing-file-upload-download-with-using-aws-s3-bucket-presigned-urls-and-python-flask-a5c372436f6